This Is How I Spend Each Day

----Living Toward Death-----

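Turnaround Point 1737 An Encounter with an Owl

Since my daytime hours all go to being with the kids, on weekends I often run at night, at Green Lake near home. Green Lake is always very busy during the day, with lots of people coming and going. When running in the daytime, I constantly have to dodge oncoming...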

-

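New Dad's Five-Year Plan | 013 Back-to-School Season

Xiaorou is three now. She is starting preschool twice a week.

She actually began preschool in the first half of the year. Back then she attended a cooperative preschool (co-op) that met once a week for half a day. In a co-op, the parents jointly rent the space and hire a teacher to give the children their "classes," while the parents handle the day-to-day running of the school. Each parent is responsible for one of the school's tasks, and a parent stays with the child the whole time.

For the child, it was much like going to the library with Mom for story time and songs. In fact, library trips with Mom happened twice a week, while preschool was only once a week. And Mom was always nearby, so whenever she missed Mom she could see her right away.

This term we are still attending the once-a-week co-op.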

-

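Turnaround Point 1736 Irvine

I'm going to Irvine twice this month. Once this Friday and again next Thursday, heading home on Saturday both times. The two trips have different purposes, yet they are closely related. The first is to attend the FCS patients'...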

-

New Dad's Five-Year Plan | 012 The Big Rollover

Icons made by Freepik from www.flaticon.com is licensed by CC 3.0 BY

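In the first few years of life, a newborn goes through a dense, concentrated run of firsts. The pace of their cognitive development and physical growth is often astonishing. What parents feel most directly is that the moment you look away, the child has picked up yet another new skill.

Xiaorui's new skill: he has learned to roll over.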

-

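Turnaround Point 1735 New Headphones

After I contacted the seller's customer service about the broken Bluetooth headphones I complained about earlier, they gave me a full refund. The headphones did have quality problems, but I have to say the customer service was dependable. I contacted them by email and explained...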

-

New Dad's Five-Year Plan | 011 Where Did My IQ Go

Photo by Nik Shuliahin on Unsplash

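You see, I'm normally a quiet man of few words, yet this child keeps turning me into a nag, to the point where I don't even like myself anymore.

"Sweetheart, wash your hands first when you come in the door."

"No, I'm not going to wash them yet. ..."

"Sweetheart, put your toys away and come eat."

"No, I want to play a little longer, ..."

"Don't touch your little brother's eyes with your hands, it will hurt."

"No..."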

-

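Turnaround Point 1734 600 Days and New Shoes

August 22, in the middle of this week, was my 600th consecutive day of running. That day I also switched to a new pair of running shoes. The old pair had to be retired because of damage to the outside and the inside. I also suspect that the last...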

-

New Dad's Five-Year Plan | 010 Bedtime Company

Photo by Annie Spratt on Unsplash

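Before this week, it was always Mom who stayed with Xiaorou at bedtime.

From this week on, I can put Xiaorou to sleep too.

Oh, that doesn't seem quite right. Back when Xiaorou was still a little baby, I used to put her to sleep as well.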

-

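Turnaround Point 1733 Headphones and the Soles of My Feet

The headphones broke again. Why say "again"? Because this isn't the first time. In December 2016 I bought a pair of Bluetooth headphones, model TT-BH16 TaoTronics Blu...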

-

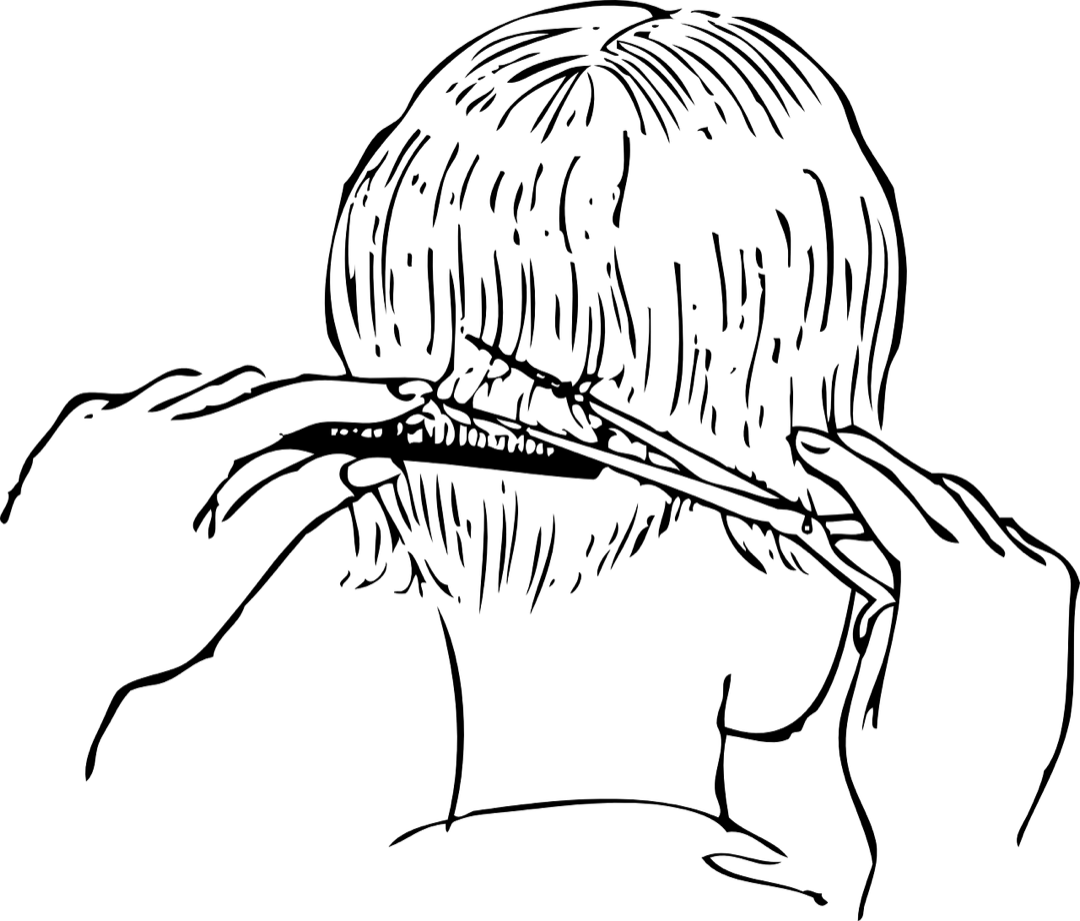

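New Dad's Five-Year Plan | 009 Haircut

Xiaorou got a haircut, and a very short one. It is even shorter than the ear-length bob my high school required of its girls. Her style can probably still be called an ear-length bob, just one on the short end. This summer, her paternal grandmother seems to have had the same hairstyle.

The decision to cut it was made by her maternal grandmother and her mom. When I came home from work and saw it, I felt a mix of delight and shock. The little girl who still had long hair when I left in the morning looked just like her grandmother by the time I got back. Seeing her immediately made me think of my own mom, and I didn't know whether I should laugh or not.

I laughed out loud anyway. Short hair is nice and fresh, after all!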